A Surge in NeRF

News

My post on volume rendering is out! (link)

My post on NDC space is out! (link)

Overview

neural radiance field (NeRF)

extended to other fields such as generative modeling (GRAF, GIRAFFE, DreamField), relighting (?, NeRF-OSR), scene editing (CCNeRF), etc.

focus on nvs/reconstruction

Background

What do we want?

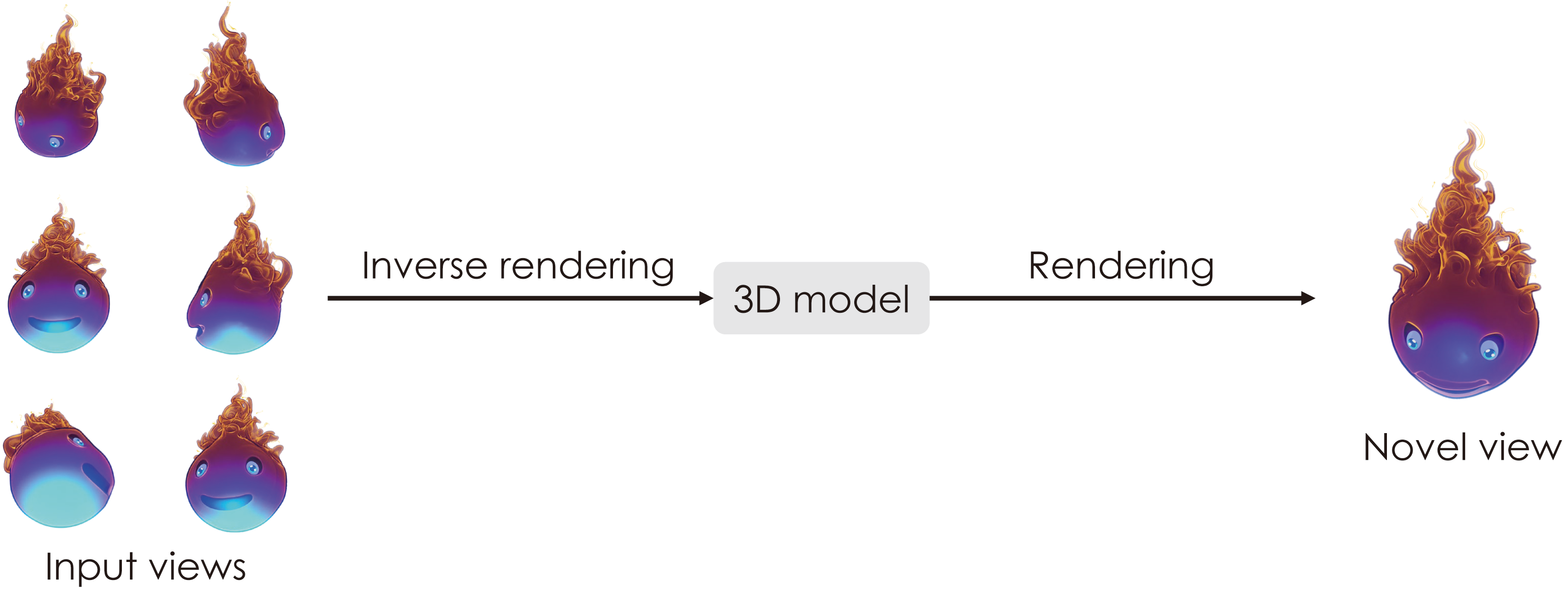

Novel view synthesis (NVS) refers to the problem of capturing a scene from a novel angle given a few input images. With downstream applications to modeling, animation, and mixed reality, NVS is fundamental to the computer vision (CV) and computer graphics (CG) fields.

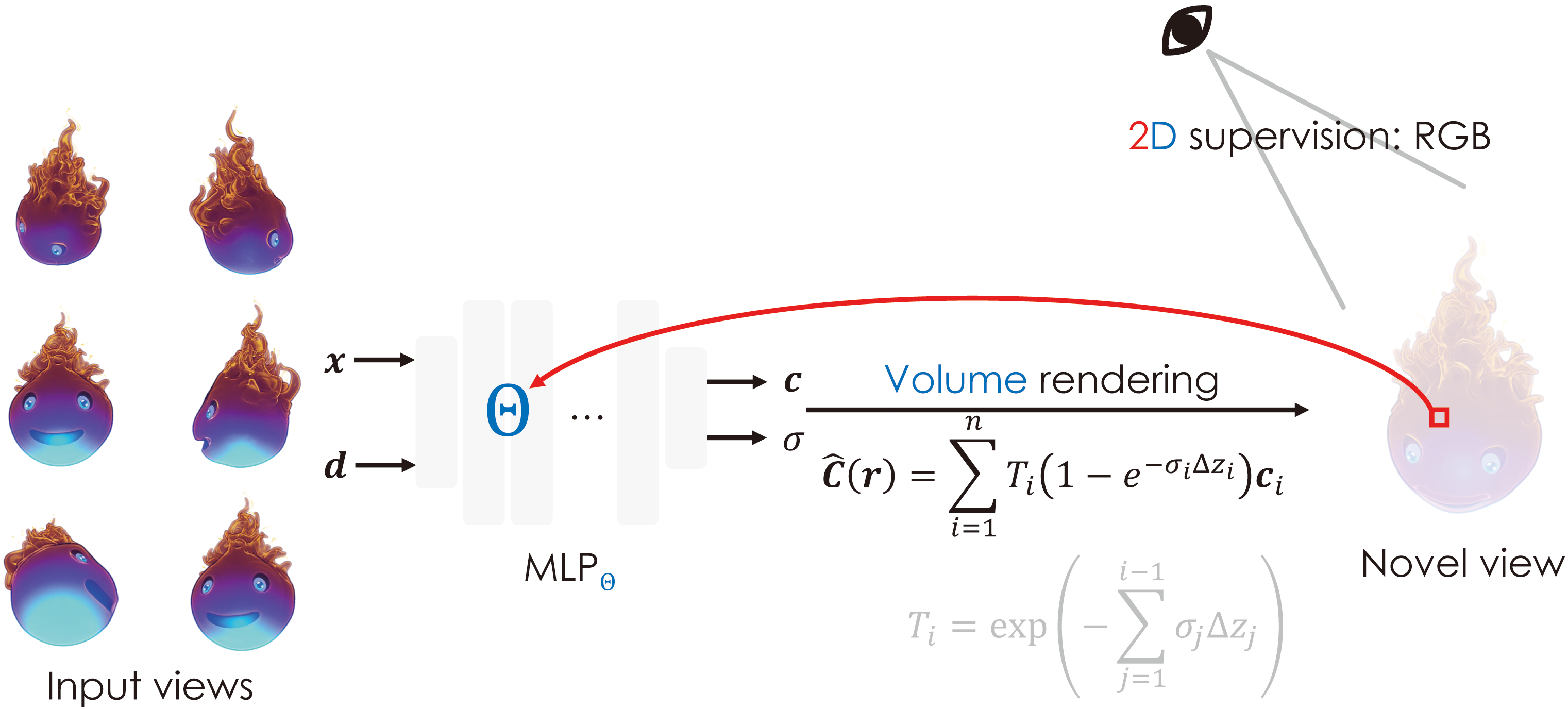

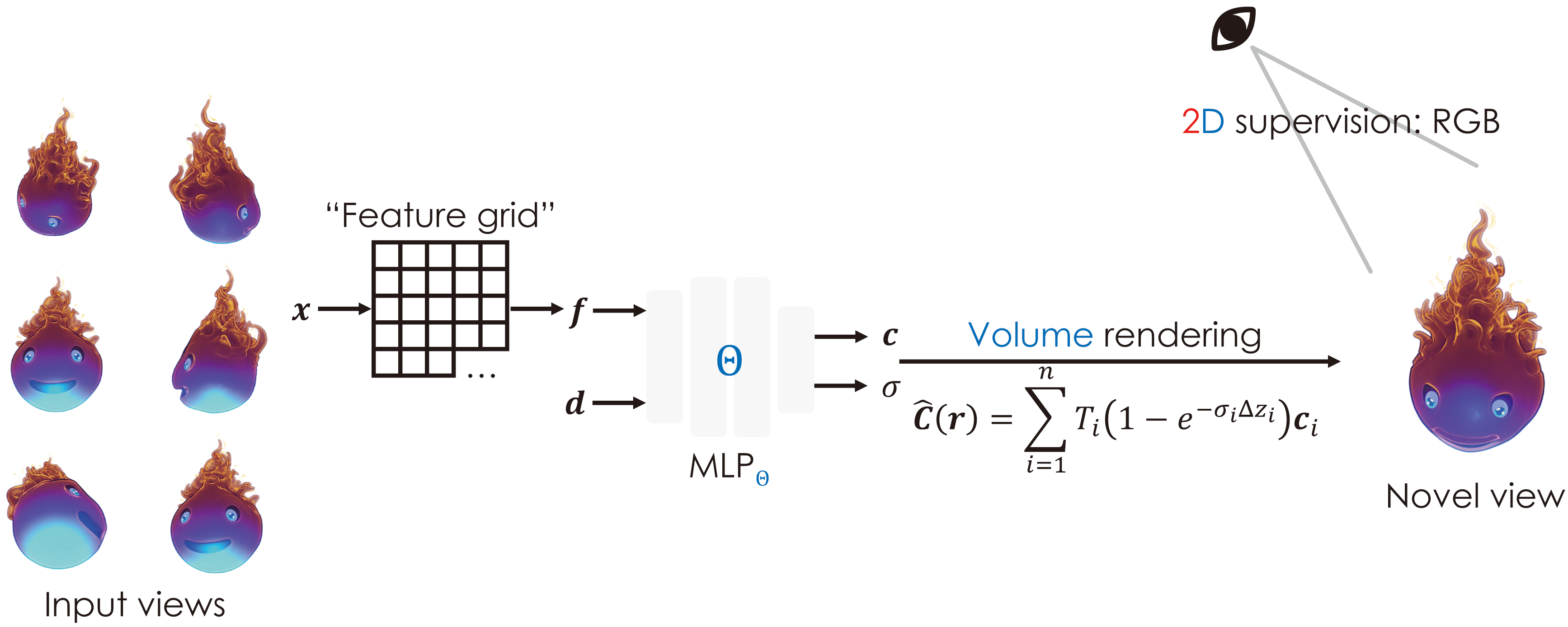

The problem is typically attacked in two stages: inverse rendering, which constructs a 3D representation from input images, and rendering, which maps that high-dimensional representation to the pixel colors of a raster image.

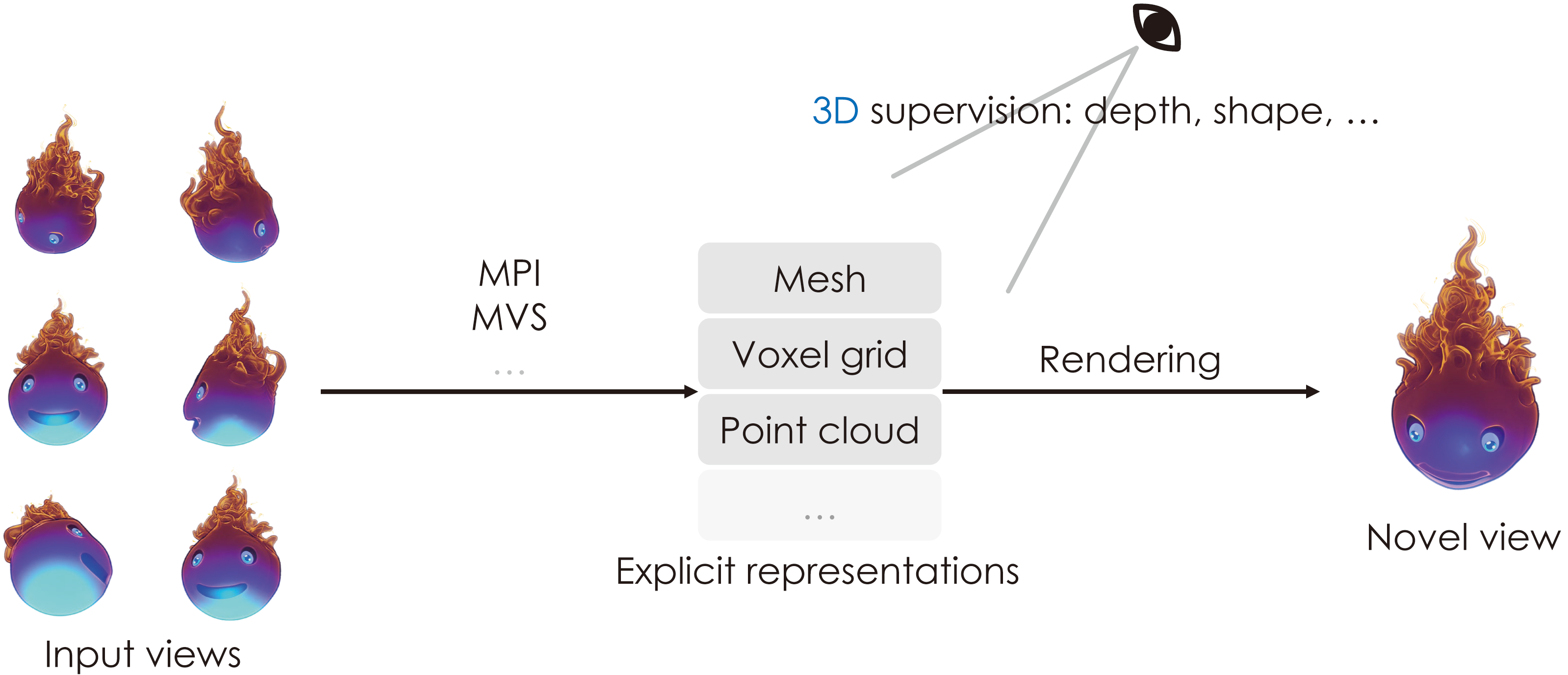

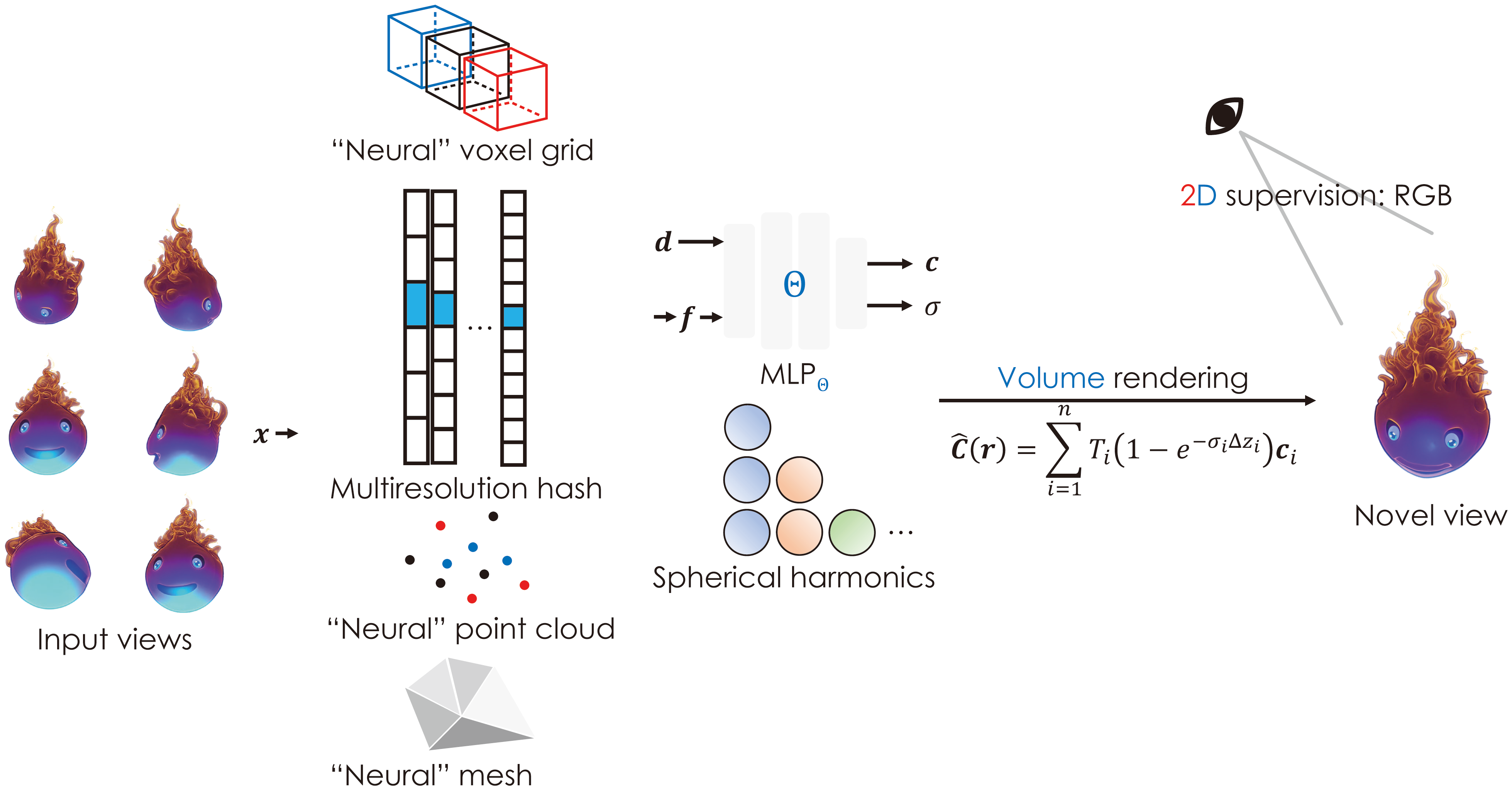

CV for inverse rendering. A 3D model can be represented explicitly as a mesh, point cloud, voxel grid, or multi-plane image (MPI). Learning-based solutions to related problems, such as structure from motion (SfM) and multi-view stereo (MVS), therefore qualify for reconstruction. Nonetheless, those approaches often depend on direct supervision, and 3D ground truth is time-consuming to obtain. Explicit representations are also memory-demanding. Hence, learning-based CV schemes hardly scale to real-world scenes.
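To put the memory claim in numbers, here is a back-of-the-envelope sketch. The layout (a dense grid storing RGB plus density as 32-bit floats) and the helper name `voxel_grid_bytes` are assumptions for illustration, not taken from any particular method.

```python
def voxel_grid_bytes(resolution, channels=4, bytes_per_value=4):
    """Memory of a dense cubic voxel grid (hypothetical layout).

    resolution: number of voxels along each axis
    channels: values per voxel (assumed here: RGB + density)
    bytes_per_value: 4 for float32
    """
    return resolution ** 3 * channels * bytes_per_value


if __name__ == "__main__":
    for res in (256, 512, 1024):
        gib = voxel_grid_bytes(res) / 1024 ** 3
        print(f"{res}^3 grid: {gib:.2f} GiB")
```

Doubling the resolution multiplies the footprint by eight, which is why dense explicit grids become costly at the resolutions real-world scenes call for.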

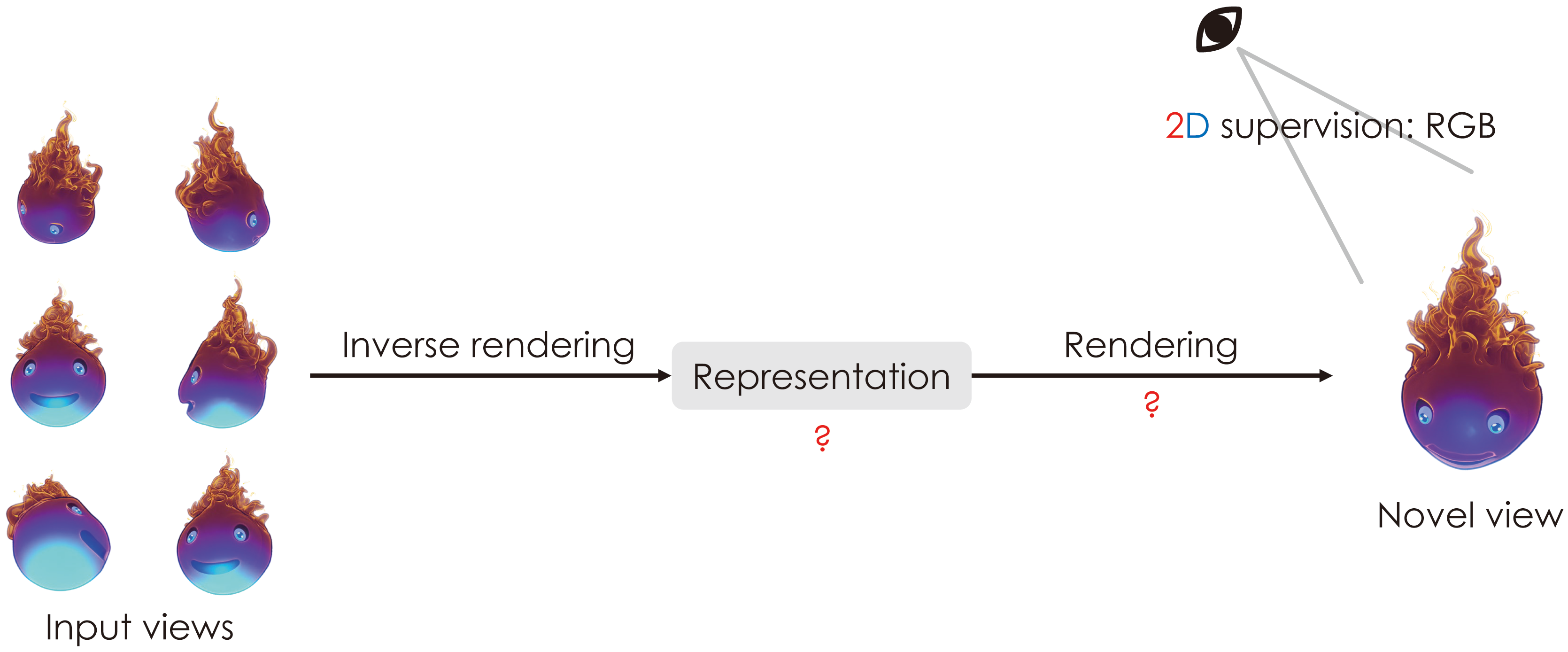

Is there a way to learn from 2D supervision? Is there a way to reduce the memory footprint of 3D representations? This is where NeRF steps in. Before it could thrive, there was a (significant) obstacle to overcome: the rendering process must be differentiable. Otherwise, gradients cannot propagate back to the geometric representation, and the network never converges.

Differentiable rendering

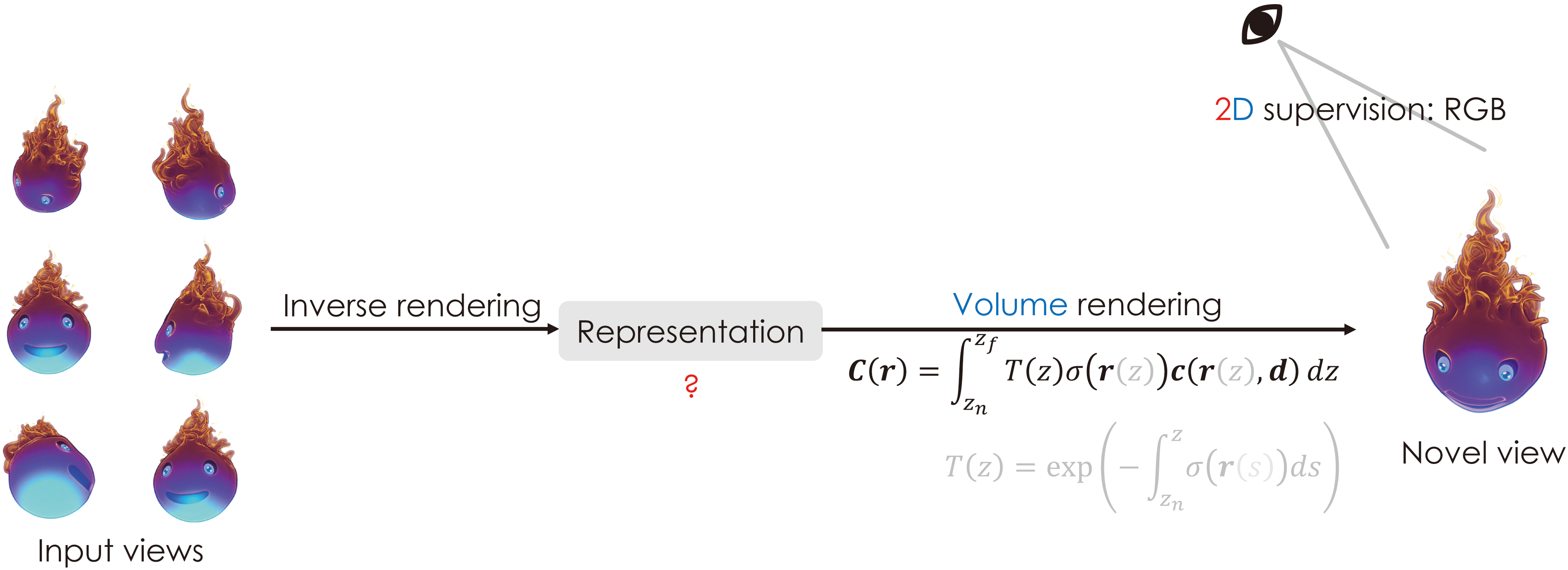

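Classical graphics pipelines apply matrix operations to triangular (or polygonal) meshes to produce a raster image. This process is not differentiable in that the gradient w.r.t. geometry is either hard to compute or unhelpful. 3D representations for differentiable rendering tend to be implicit. DVR, with a misleading[1] title, differentiably renders a pixel given implicit surfaces. An alternative is volume rendering, where a ray "casts" to a volumetric representation, and colors are "accumulated" by

$$
\mathbf{C}(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,\mathrm{d}t, \qquad T(t) = \exp\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,\mathrm{d}s\right),
$$

given "volume density" $\sigma$ and color $\mathbf{c}$ along the ray $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$, with near and far bounds $t_n$ and $t_f$.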
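In practice the accumulation along a ray is evaluated numerically. The sketch below uses the quadrature from the NeRF paper, where each sample contributes its color weighted by its opacity $1 - \exp(-\sigma_i \delta_i)$ and by the transmittance of everything in front of it. The function name `composite_along_ray` and the use of bin edges `t_edges` (rather than sample positions) are assumptions made for this illustration.

```python
import numpy as np


def composite_along_ray(sigmas, colors, t_edges):
    """Approximate the volume rendering integral along one ray.

    sigmas:  (N,)   volume density of each segment
    colors:  (N, 3) RGB color of each segment
    t_edges: (N+1,) distances along the ray that bound the N segments
    Returns the composited RGB color and the per-segment weights.
    """
    deltas = np.diff(t_edges)                 # segment lengths
    alphas = 1.0 - np.exp(-sigmas * deltas)   # opacity of each segment
    # Transmittance: fraction of light surviving all earlier segments.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights


if __name__ == "__main__":
    # Empty space, then a dense green "wall", then something blue behind it.
    sigmas = np.array([0.0, 1e3, 1e3])
    colors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    t_edges = np.array([0.0, 1.0, 2.0, 3.0])
    rgb, weights = composite_along_ray(sigmas, colors, t_edges)
    print(rgb, weights)
```

Every operation here (exponential, cumulative product, weighted sum) is differentiable with respect to the densities and colors, which is what lets a 2D photometric loss drive the 3D representation.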

Ray tracing? Ray casting? Ray marching!

Volume rendering is an image-order approach. For every pixel, a ray is emitted from the camera, passes through the pixel center, and "casts" to the volumetric representation. Unlike in ray tracing, the ray does not reflect off surfaces. Rather, it marches through the entire volume. This is reminiscent of ray casting, which is widely used in medical imaging. Unlike ray casting, however, it does not intend to reveal the internal structure of "volume data". What we want is the color of that pixel. Such an approach is referred to as ray marching.
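The marching procedure can be sketched as follows: one ray per pixel center, then evenly spaced sample points along each ray. The pinhole camera at the origin looking down the negative z-axis, the focal length in pixels, and the helper names `pixel_center_rays` and `march` are all assumptions for this illustration; actual implementations differ in conventions and usually sample non-uniformly.

```python
import numpy as np


def pixel_center_rays(height, width, focal):
    """One unit-length ray per pixel, through the pixel center.

    Assumes a pinhole camera at the origin looking down -z,
    with `focal` given in pixels.
    """
    # Pixel centers sit at half-integer coordinates.
    x, y = np.meshgrid(np.arange(width) + 0.5,
                       np.arange(height) + 0.5, indexing="xy")
    dirs = np.stack([(x - width / 2) / focal,
                     -(y - height / 2) / focal,
                     -np.ones_like(x)], axis=-1)
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)
    origins = np.zeros_like(dirs)
    return origins, dirs


def march(origins, dirs, near, far, n_samples):
    """Evenly spaced sample points along each ray between near and far."""
    t = np.linspace(near, far, n_samples)
    # (H, W, 1, 3) + (N, 1) * (H, W, 1, 3) -> (H, W, N, 3)
    points = origins[..., None, :] + t[:, None] * dirs[..., None, :]
    return points, t


if __name__ == "__main__":
    origins, dirs = pixel_center_rays(height=3, width=3, focal=1.0)
    points, t = march(origins, dirs, near=2.0, far=6.0, n_samples=4)
    print(points.shape)
```

Each sample point would then be queried for density and color, and the results composited into the pixel color.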

Info

personal view, may be controversial

not strictly "physical"

Analysis

The above integral demands the continuity of the volume density $\sigma$ and the color $\mathbf{c}$, making the volumetric representation essentially a scalar field.

MLP as radiance field

Entirely implicit? A step back…

"Men are still good."[2]

mip-NeRF, mip-NeRF 360, ref-NeRF

Summary

References

CS184/284a by UC Berkeley

CS348n by Stanford University

DIVeR: Real-time and Accurate Neural Radiance Fields with Deterministic Integration for Volume Rendering

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding

Mip-NeRF: A Multiscale Representation for Anti-Aliasing Neural Radiance Fields

NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

Neural Sparse Voxel Fields

PlenOctrees for Real-time Neural Radiance Fields

Plenoxels: Radiance Fields without Neural Networks

Point-NeRF: Point-based Neural Radiance Fields

Ref-NeRF: Structured View-Dependent Appearance for Neural Radiance Fields

Is NeRF (neural radiance field) backed by physical (optical) principles? (original title: NeRF(神经辐射场)有相关的物理(光学)原理支撑吗?)

Errata

| Time | Modification |
|---|---|
| Sep 18 2022 | Pre-release |
| Oct 16 2022 | Initial release |

? ↩︎

"Men are still good" is a closing line from the film Batman v Superman: Dawn of Justice conveying Bruce Wayne's faith in mankind. It is cited here to imply that pure MLP representations of radiance fields are not (at all) inferior to "hybrid" representations, in terms of quality, of course. ↩︎